import numpy as np

import pandas as pd

from scipy.stats import binom

from scipy.stats import uniform, bernoulli

from scipy.stats import beta

from scipy.stats import betabinom

import numpy as np

import matplotlib.pyplot as plt

from scipy.stats import norm

import numpy as np

rng = np.random.default_rng()

plt.rcParams["figure.figsize"] = (5,3)I’ve always struggled to understand how Bayesian inference worked, what was the difference between events and random variables, why sampling was needed, how it was used for regression and more.

As always, to start understanding things better, I code them from scratch in Python :)

Bayes for a set of random events

My own way of understanding Bayesian Inference. Heavily based on: - My examples are partially taken from: https://www.bayesrulesbook.com/chapter-6.html - My understanding is coming from: https://xcelab.net/rm/statistical-rethinking/

1. You have some prior knowledge about the probability of each random event

There is a set of events for which we have some a-priori knowledge of probabilities.

For example, let’s take the following situation:

You’re about to get on a plane to Seattle. You want to know if you should bring an umbrella. You call a friendof yours who live there and ask if it’s raining. Your friend has a 2/3 chance of telling you the truth and a 1/3 chance of messing with you by lying. He tells you that “Yes” it is raining. What is the probability that it’s actually raining in Seattle?

We are interested in the probabilities of 2 events: - Event “Rain” - Event “NoRain”

You might not know anything about the probabilities of each of these events, but you might have some a-priori. Either way, you can express your current belief in probabilities of each of those events.

We can visualize our current, our “prior” knowledge with a graph like the following

probabilities = pd.DataFrame({

'events': ['Rain', 'NoRain'],

'prior_knowledge': [0.5, 0.5],

});

probabilities| events | prior_knowledge | |

|---|---|---|

| 0 | Rain | 0.5 |

| 1 | NoRain | 0.5 |

2. You have an additional knowledge: the relationship between another event happening and the probabilities of events Rain and NoRain

Now, there is another event happening: you call a friend and he tells you that it is raining. And you know (maybe from past experiences) that this friend is lying 1/3rd of the time.

The event happening: “Calling a 1/3rd lying friend that tells you it is raining”

Note something: this is another event, which happens “on top” of the event raining/not raninig. But there is a relationship between these events that (for some reason) you can exactly express mathematically.

The trick is to apply this relationship, and understand the likelihood of the event “Calling a 1/3rd lying friend that tells you it is raining” in each of the initial events: - Rain: in this situation there is 2/3 chances of the friend saying that it rains - NoRain: in this situation there is 1/3 chances of the friend saying that it rains

So we now have a sytem to tell, in each situtation “Rain” and “NoRain” of the initial event, what are the chances of observing this new event of the friend mentioning rain.

- The event “Calling a 1/3rd lying friend that tells you it is raining” is called the data

- Your prior expcations in the events “Rain”/“NoRain” is called the prior

I like to visualize this on top of the previous graph:

This drives home for me the fact that we have a pre-existing belief in the probabilities for each event, and that the new event happening has likelihoods of happening that can be different in each of the initial events.

# We observed the data when calling our friend

likelihood = pd.DataFrame({\

'events': ['Rain', 'NoRain'],

'likelihood': [2/3, 1/3], # We use our system, here logical thinking from what is said in the prompt, to link the observations to each event

});

likelihood| events | likelihood | |

|---|---|---|

| 0 | Rain | 0.666667 |

| 1 | NoRain | 0.333333 |

3. Now you combine both to answer the question “What are the chances of Raining if event”Friend telling rain” happened ?

What you can tell looking at the graph, is the chances of the friend saying “rain” if A or saying “rain” if B.

To apply Bayes Rule and answer the question, you need to restrict the events to only the “rain” - friend” events.

bayes = pd.concat([probabilities, likelihood.drop(columns=['events'])], axis=1)

bayes| events | prior_knowledge | likelihood | |

|---|---|---|---|

| 0 | Rain | 0.5 | 0.666667 |

| 1 | NoRain | 0.5 | 0.333333 |

bayes['posterior_unnormalized'] = bayes['prior_knowledge'] * bayes['likelihood']

bayes| events | prior_knowledge | likelihood | posterior_unnormalized | |

|---|---|---|---|---|

| 0 | Rain | 0.5 | 0.666667 | 0.333333 |

| 1 | NoRain | 0.5 | 0.333333 | 0.166667 |

bayes['posterior'] = bayes['posterior_unnormalized'] / bayes['posterior_unnormalized'].sum()

bayes| events | prior_knowledge | likelihood | posterior_unnormalized | posterior | |

|---|---|---|---|---|---|

| 0 | Rain | 0.5 | 0.666667 | 0.333333 | 0.666667 |

| 1 | NoRain | 0.5 | 0.333333 | 0.166667 | 0.333333 |

Finally, this way, we can answer that the probabilities of Rain given that the friend said that it was raining is 66%.

Bayes for more categories

From 2 categories, we can move to more categories

From : https://www.bayesrulesbook.com/chapter-2#michelle-simple

For example, suppose you’re watching an interview of somebody that lives in the United States. Without knowing anything about this person, U.S. Census figures provide prior information about the region in which they might live: the Midwest ( M ), Northeast ( N ), South ( S ), or West ( W ).16 This prior model is summarized in Table 2.5.17 Notice that the South is the most populous region and the Northeast the least (P(S) > P(N)). Thus, based on population statistics alone, there’s a 38% prior probability that the interviewee lives in the South

Now that we have the prior probabilities for each event, we need to observe some data. Here we will observe

# We observered the data when the person said "pop"

probabilities = pd.DataFrame({

'events': ['M', 'N', 'S', 'W'],

'prior_knowledge': [0.21, 0.17, 0.38, 0.24], # The likelihoods are derived from analysing data in each region.

});

probabilities| events | prior_knowledge | |

|---|---|---|

| 0 | M | 0.21 |

| 1 | N | 0.17 |

| 2 | S | 0.38 |

| 3 | W | 0.24 |

But then, you see the person point to a fizzy cola drink and say “please pass my pop.” Though the country is united in its love of fizzy drinks, it’s divided in what they’re called, with common regional terms including “pop,” “soda,” and “coke.” This data, i.e., the person’s use of “pop,” provides further information about where they might live. To evaluate this data, we can examine the pop_vs_soda dataset in the bayesrules package (Dogucu, Johnson, and Ott 2021) which includes 374250 responses to a volunteer survey conducted at popvssoda.com

likelihood = pd.DataFrame({

'events': ['M', 'N', 'S', 'W'],

'likelihood': [0.6447, 0.2734, 0.07922, 0.2943], # We use our system, here obserbing data in each region, to link the observations to each event

});

likelihood| events | likelihood | |

|---|---|---|

| 0 | M | 0.64470 |

| 1 | N | 0.27340 |

| 2 | S | 0.07922 |

| 3 | W | 0.29430 |

bayes = pd.concat([probabilities, likelihood.drop(columns=['events'])], axis=1)

bayes| events | prior_knowledge | likelihood | |

|---|---|---|---|

| 0 | M | 0.21 | 0.64470 |

| 1 | N | 0.17 | 0.27340 |

| 2 | S | 0.38 | 0.07922 |

| 3 | W | 0.24 | 0.29430 |

bayes['posterior_unnormalized'] = bayes['prior_knowledge'] * bayes['likelihood']

bayes| events | prior_knowledge | likelihood | posterior_unnormalized | |

|---|---|---|---|---|

| 0 | M | 0.21 | 0.64470 | 0.135387 |

| 1 | N | 0.17 | 0.27340 | 0.046478 |

| 2 | S | 0.38 | 0.07922 | 0.030104 |

| 3 | W | 0.24 | 0.29430 | 0.070632 |

bayes['posterior'] = bayes['posterior_unnormalized'] / bayes['posterior_unnormalized'].sum()

bayes| events | prior_knowledge | likelihood | posterior_unnormalized | posterior | |

|---|---|---|---|---|---|

| 0 | M | 0.21 | 0.64470 | 0.135387 | 0.479075 |

| 1 | N | 0.17 | 0.27340 | 0.046478 | 0.164465 |

| 2 | S | 0.38 | 0.07922 | 0.030104 | 0.106523 |

| 3 | W | 0.24 | 0.29430 | 0.070632 | 0.249936 |

Bayes for random variable, still discrete

We now have a system where, if we have prior events with prior probabilities, and some “data” event happening that relate to the prior events with some probabilities, we can derive a posterior where we update our knowledge on the prior events.

The Trick

Magically, we can use this exact system with a Statistical Model, and adapt it the following way: - The “prior events” are going to be a random variable of a statistical model - The “data events” are going to be observations you make of the statistical model outputs

probabilities = pd.DataFrame({

'events': ['0.2', '0.5', '0.8'],

'prior_knowledge': [0.1, 0.25, 0.65],

});

probabilities| events | prior_knowledge | |

|---|---|---|

| 0 | 0.2 | 0.10 |

| 1 | 0.5 | 0.25 |

| 2 | 0.8 | 0.65 |

the binomial model is the “system” we use to find the likelihood at each event, at each potential value of PI

# We observed 1 victory out of 6 games

likelihood = pd.DataFrame({

'events': ['0.2', '0.5', '0.8'],

'likelihood': [

binom.pmf(k=1, n=6, p=0.1), # We use our system, here the Binomial distribution, to link the observations to each event

binom.pmf(k=1, n=6, p=0.25), # We use our system, here the Binomial distribution, to link the observations to each event

binom.pmf(k=1, n=6, p=0.65)], # We use our system, here the Binomial distribution, to link the observations to each event

});

likelihood| events | likelihood | |

|---|---|---|

| 0 | 0.2 | 0.354294 |

| 1 | 0.5 | 0.355957 |

| 2 | 0.8 | 0.020484 |

bayes = pd.concat([probabilities, likelihood.drop(columns=['events'])], axis=1)

bayes| events | prior_knowledge | likelihood | |

|---|---|---|---|

| 0 | 0.2 | 0.10 | 0.354294 |

| 1 | 0.5 | 0.25 | 0.355957 |

| 2 | 0.8 | 0.65 | 0.020484 |

bayes['posterior_unnormalized'] = bayes['prior_knowledge'] * bayes['likelihood']

bayes| events | prior_knowledge | likelihood | posterior_unnormalized | |

|---|---|---|---|---|

| 0 | 0.2 | 0.10 | 0.354294 | 0.035429 |

| 1 | 0.5 | 0.25 | 0.355957 | 0.088989 |

| 2 | 0.8 | 0.65 | 0.020484 | 0.013314 |

bayes['posterior'] = bayes['posterior_unnormalized'] / bayes['posterior_unnormalized'].sum()

bayes| events | prior_knowledge | likelihood | posterior_unnormalized | posterior | |

|---|---|---|---|---|---|

| 0 | 0.2 | 0.10 | 0.354294 | 0.035429 | 0.257233 |

| 1 | 0.5 | 0.25 | 0.355957 | 0.088989 | 0.646100 |

| 2 | 0.8 | 0.65 | 0.020484 | 0.013314 | 0.096667 |

Bayes for continuous random variable

Instead of considering only 3 possible events, 3 possible values to the PI, we can define a continuous set of possible events.

Let’s say that PI represents the chances of winning a chess Game.

The observable “data” is how many out of 8 games have been won.

For example if 1 out of 8 games are won, we can estimate the likelihood of that event happening for every possible PI with Binomial(k=1, n=8, p=PI)

linspace = np.linspace(0, 1, 30)

pdf = beta.pdf(x=linspace, a=3, b=3)

pdf = pdf/pdf.sum()

plt.title('prior')

plt.stem(linspace, pdf)

probabilities = pd.DataFrame({

'events': linspace,

'prior_knowledge': pdf,

});

probabilities| events | prior_knowledge | |

|---|---|---|

| 0 | 0.000000 | 0.000000 |

| 1 | 0.034483 | 0.001147 |

| 2 | 0.068966 | 0.004265 |

| 3 | 0.103448 | 0.008899 |

| 4 | 0.137931 | 0.014626 |

| 5 | 0.172414 | 0.021062 |

| 6 | 0.206897 | 0.027854 |

| 7 | 0.241379 | 0.034688 |

| 8 | 0.275862 | 0.041281 |

| 9 | 0.310345 | 0.047389 |

| 10 | 0.344828 | 0.052801 |

| 11 | 0.379310 | 0.057341 |

| 12 | 0.413793 | 0.060868 |

| 13 | 0.448276 | 0.063279 |

| 14 | 0.482759 | 0.064502 |

| 15 | 0.517241 | 0.064502 |

| 16 | 0.551724 | 0.063279 |

| 17 | 0.586207 | 0.060868 |

| 18 | 0.620690 | 0.057341 |

| 19 | 0.655172 | 0.052801 |

| 20 | 0.689655 | 0.047389 |

| 21 | 0.724138 | 0.041281 |

| 22 | 0.758621 | 0.034688 |

| 23 | 0.793103 | 0.027854 |

| 24 | 0.827586 | 0.021062 |

| 25 | 0.862069 | 0.014626 |

| 26 | 0.896552 | 0.008899 |

| 27 | 0.931034 | 0.004265 |

| 28 | 0.965517 | 0.001147 |

| 29 | 1.000000 | 0.000000 |

# We observed 1 win out of 8 games

likelihood = pd.DataFrame({

'events': linspace,

'likelihood': [

binom.pmf(k=1, n=8, p=probability) # We use our system, here the PMF of the Binomial, to link the observations to each event

for probability in linspace],

});

likelihood| events | likelihood | |

|---|---|---|

| 0 | 0.000000 | 0.000000e+00 |

| 1 | 0.034483 | 2.157805e-01 |

| 2 | 0.068966 | 3.345664e-01 |

| 3 | 0.103448 | 3.853370e-01 |

| 4 | 0.137931 | 3.904326e-01 |

| 5 | 0.172414 | 3.667370e-01 |

| 6 | 0.206897 | 3.267022e-01 |

| 7 | 0.241379 | 2.792305e-01 |

| 8 | 0.275862 | 2.304258e-01 |

| 9 | 0.310345 | 1.842292e-01 |

| 10 | 0.344828 | 1.429490e-01 |

| 11 | 0.379310 | 1.076976e-01 |

| 12 | 0.413793 | 7.874622e-02 |

| 13 | 0.448276 | 5.580707e-02 |

| 14 | 0.482759 | 3.825365e-02 |

| 15 | 0.517241 | 2.528678e-02 |

| 16 | 0.551724 | 1.605571e-02 |

| 17 | 0.586207 | 9.741451e-03 |

| 18 | 0.620690 | 5.609541e-03 |

| 19 | 0.655172 | 3.038503e-03 |

| 20 | 0.689655 | 1.529796e-03 |

| 21 | 0.724138 | 7.042960e-04 |

| 22 | 0.758621 | 2.897443e-04 |

| 23 | 0.793103 | 1.029657e-04 |

| 24 | 0.827586 | 2.998522e-05 |

| 25 | 0.862069 | 6.550372e-06 |

| 26 | 0.896552 | 9.093439e-07 |

| 27 | 0.931034 | 5.526876e-08 |

| 28 | 0.965517 | 4.477793e-10 |

| 29 | 1.000000 | 0.000000e+00 |

plt.title('likelihood')

plt.stem(likelihood['events'], likelihood['likelihood'])

bayes = pd.concat([probabilities, likelihood.drop(columns=['events'])], axis=1)

bayes| events | prior_knowledge | likelihood | |

|---|---|---|---|

| 0 | 0.000000 | 0.000000 | 0.000000e+00 |

| 1 | 0.034483 | 0.001147 | 2.157805e-01 |

| 2 | 0.068966 | 0.004265 | 3.345664e-01 |

| 3 | 0.103448 | 0.008899 | 3.853370e-01 |

| 4 | 0.137931 | 0.014626 | 3.904326e-01 |

| 5 | 0.172414 | 0.021062 | 3.667370e-01 |

| 6 | 0.206897 | 0.027854 | 3.267022e-01 |

| 7 | 0.241379 | 0.034688 | 2.792305e-01 |

| 8 | 0.275862 | 0.041281 | 2.304258e-01 |

| 9 | 0.310345 | 0.047389 | 1.842292e-01 |

| 10 | 0.344828 | 0.052801 | 1.429490e-01 |

| 11 | 0.379310 | 0.057341 | 1.076976e-01 |

| 12 | 0.413793 | 0.060868 | 7.874622e-02 |

| 13 | 0.448276 | 0.063279 | 5.580707e-02 |

| 14 | 0.482759 | 0.064502 | 3.825365e-02 |

| 15 | 0.517241 | 0.064502 | 2.528678e-02 |

| 16 | 0.551724 | 0.063279 | 1.605571e-02 |

| 17 | 0.586207 | 0.060868 | 9.741451e-03 |

| 18 | 0.620690 | 0.057341 | 5.609541e-03 |

| 19 | 0.655172 | 0.052801 | 3.038503e-03 |

| 20 | 0.689655 | 0.047389 | 1.529796e-03 |

| 21 | 0.724138 | 0.041281 | 7.042960e-04 |

| 22 | 0.758621 | 0.034688 | 2.897443e-04 |

| 23 | 0.793103 | 0.027854 | 1.029657e-04 |

| 24 | 0.827586 | 0.021062 | 2.998522e-05 |

| 25 | 0.862069 | 0.014626 | 6.550372e-06 |

| 26 | 0.896552 | 0.008899 | 9.093439e-07 |

| 27 | 0.931034 | 0.004265 | 5.526876e-08 |

| 28 | 0.965517 | 0.001147 | 4.477793e-10 |

| 29 | 1.000000 | 0.000000 | 0.000000e+00 |

bayes['posterior_unnormalized'] = bayes['prior_knowledge'] * bayes['likelihood']

bayes| events | prior_knowledge | likelihood | posterior_unnormalized | |

|---|---|---|---|---|

| 0 | 0.000000 | 0.000000 | 0.000000e+00 | 0.000000e+00 |

| 1 | 0.034483 | 0.001147 | 2.157805e-01 | 2.474344e-04 |

| 2 | 0.068966 | 0.004265 | 3.345664e-01 | 1.426927e-03 |

| 3 | 0.103448 | 0.008899 | 3.853370e-01 | 3.428955e-03 |

| 4 | 0.137931 | 0.014626 | 3.904326e-01 | 5.710550e-03 |

| 5 | 0.172414 | 0.021062 | 3.667370e-01 | 7.724121e-03 |

| 6 | 0.206897 | 0.027854 | 3.267022e-01 | 9.100016e-03 |

| 7 | 0.241379 | 0.034688 | 2.792305e-01 | 9.685814e-03 |

| 8 | 0.275862 | 0.041281 | 2.304258e-01 | 9.512211e-03 |

| 9 | 0.310345 | 0.047389 | 1.842292e-01 | 8.730425e-03 |

| 10 | 0.344828 | 0.052801 | 1.429490e-01 | 7.547798e-03 |

| 11 | 0.379310 | 0.057341 | 1.076976e-01 | 6.175448e-03 |

| 12 | 0.413793 | 0.060868 | 7.874622e-02 | 4.793160e-03 |

| 13 | 0.448276 | 0.063279 | 5.580707e-02 | 3.531407e-03 |

| 14 | 0.482759 | 0.064502 | 3.825365e-02 | 2.467421e-03 |

| 15 | 0.517241 | 0.064502 | 2.528678e-02 | 1.631038e-03 |

| 16 | 0.551724 | 0.063279 | 1.605571e-02 | 1.015987e-03 |

| 17 | 0.586207 | 0.060868 | 9.741451e-03 | 5.929470e-04 |

| 18 | 0.620690 | 0.057341 | 5.609541e-03 | 3.216545e-04 |

| 19 | 0.655172 | 0.052801 | 3.038503e-03 | 1.604348e-04 |

| 20 | 0.689655 | 0.047389 | 1.529796e-03 | 7.249540e-05 |

| 21 | 0.724138 | 0.041281 | 7.042960e-04 | 2.907406e-05 |

| 22 | 0.758621 | 0.034688 | 2.897443e-04 | 1.005051e-05 |

| 23 | 0.793103 | 0.027854 | 1.029657e-04 | 2.868023e-06 |

| 24 | 0.827586 | 0.021062 | 2.998522e-05 | 6.315411e-07 |

| 25 | 0.862069 | 0.014626 | 6.550372e-06 | 9.580713e-08 |

| 26 | 0.896552 | 0.008899 | 9.093439e-07 | 8.091876e-09 |

| 27 | 0.931034 | 0.004265 | 5.526876e-08 | 2.357215e-10 |

| 28 | 0.965517 | 0.001147 | 4.477793e-10 | 5.134663e-13 |

| 29 | 1.000000 | 0.000000 | 0.000000e+00 | 0.000000e+00 |

bayes['posterior'] = bayes['posterior_unnormalized'] / bayes['posterior_unnormalized'].sum()

bayes| events | prior_knowledge | likelihood | posterior_unnormalized | posterior | |

|---|---|---|---|---|---|

| 0 | 0.000000 | 0.000000 | 0.000000e+00 | 0.000000e+00 | 0.000000e+00 |

| 1 | 0.034483 | 0.001147 | 2.157805e-01 | 2.474344e-04 | 2.948492e-03 |

| 2 | 0.068966 | 0.004265 | 3.345664e-01 | 1.426927e-03 | 1.700363e-02 |

| 3 | 0.103448 | 0.008899 | 3.853370e-01 | 3.428955e-03 | 4.086031e-02 |

| 4 | 0.137931 | 0.014626 | 3.904326e-01 | 5.710550e-03 | 6.804838e-02 |

| 5 | 0.172414 | 0.021062 | 3.667370e-01 | 7.724121e-03 | 9.204261e-02 |

| 6 | 0.206897 | 0.027854 | 3.267022e-01 | 9.100016e-03 | 1.084381e-01 |

| 7 | 0.241379 | 0.034688 | 2.792305e-01 | 9.685814e-03 | 1.154186e-01 |

| 8 | 0.275862 | 0.041281 | 2.304258e-01 | 9.512211e-03 | 1.133500e-01 |

| 9 | 0.310345 | 0.047389 | 1.842292e-01 | 8.730425e-03 | 1.040340e-01 |

| 10 | 0.344828 | 0.052801 | 1.429490e-01 | 7.547798e-03 | 8.994150e-02 |

| 11 | 0.379310 | 0.057341 | 1.076976e-01 | 6.175448e-03 | 7.358822e-02 |

| 12 | 0.413793 | 0.060868 | 7.874622e-02 | 4.793160e-03 | 5.711652e-02 |

| 13 | 0.448276 | 0.063279 | 5.580707e-02 | 3.531407e-03 | 4.208115e-02 |

| 14 | 0.482759 | 0.064502 | 3.825365e-02 | 2.467421e-03 | 2.940243e-02 |

| 15 | 0.517241 | 0.064502 | 2.528678e-02 | 1.631038e-03 | 1.943586e-02 |

| 16 | 0.551724 | 0.063279 | 1.605571e-02 | 1.015987e-03 | 1.210676e-02 |

| 17 | 0.586207 | 0.060868 | 9.741451e-03 | 5.929470e-04 | 7.065708e-03 |

| 18 | 0.620690 | 0.057341 | 5.609541e-03 | 3.216545e-04 | 3.832917e-03 |

| 19 | 0.655172 | 0.052801 | 3.038503e-03 | 1.604348e-04 | 1.911783e-03 |

| 20 | 0.689655 | 0.047389 | 1.529796e-03 | 7.249540e-05 | 8.638738e-04 |

| 21 | 0.724138 | 0.041281 | 7.042960e-04 | 2.907406e-05 | 3.464539e-04 |

| 22 | 0.758621 | 0.034688 | 2.897443e-04 | 1.005051e-05 | 1.197645e-04 |

| 23 | 0.793103 | 0.027854 | 1.029657e-04 | 2.868023e-06 | 3.417610e-05 |

| 24 | 0.827586 | 0.021062 | 2.998522e-05 | 6.315411e-07 | 7.525606e-06 |

| 25 | 0.862069 | 0.014626 | 6.550372e-06 | 9.580713e-08 | 1.141662e-06 |

| 26 | 0.896552 | 0.008899 | 9.093439e-07 | 8.091876e-09 | 9.642487e-08 |

| 27 | 0.931034 | 0.004265 | 5.526876e-08 | 2.357215e-10 | 2.808917e-09 |

| 28 | 0.965517 | 0.001147 | 4.477793e-10 | 5.134663e-13 | 6.118597e-12 |

| 29 | 1.000000 | 0.000000 | 0.000000e+00 | 0.000000e+00 | 0.000000e+00 |

plt.title('posterior')

plt.stem(linspace, bayes['posterior'])

Using it for a regression

https://www.bayesrulesbook.com/chapter-9

So far, this is neat, but not very useful. How can we use this system for a regression ?

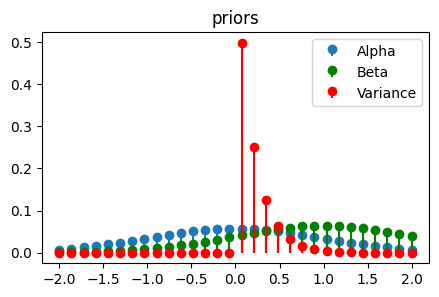

The idea is to assign things in the following way: - For a regression Yi = Gaussian(mu=Alpha + Beta * Xi, sigma=variance) (for a single Sample!!) - The “events” are going to be the possible values of Alpha and Beta - The “system” linking a likelihood to each possible events is going to be applying the linear system with the chosen Alpha, Beta and the observed Xi and Yi fo this sample.

The situation will be the following:

We have a dataset coming from a bike rental agencies. For a set of sample days, we have the number of clients and the temperature.

We will start with a trivial dataset with a single sample: - temperatures = [15] - n_clients [5]

We want to build a regression to know how those two values relate.

We will then extend to a less trivial use case with multiple samples: - temperatures = [15, 23, 22, 12] - n_clients = [5, 7, 6, 2]

from scipy.stats import expon, beta, normlinspace = np.linspace(-3, 6, 40)

alpha_pdf = norm.pdf(x=linspace, loc=0, scale=5)

alpha_pdf = alpha_pdf / alpha_pdf.sum()

beta_pdf = norm.pdf(x=linspace, loc=2, scale=5)

beta_pdf = beta_pdf / beta_pdf.sum()

gaussian_variance_pdf = expon.pdf(x=linspace, scale=0.2)

gaussian_variance_pdf = gaussian_variance_pdf / gaussian_variance_pdf.sum()

plt.title('priors')

plt.stem(linspace, alpha_pdf, label='Alpha', basefmt=" ")

plt.stem(linspace, beta_pdf, 'g', label='Beta', basefmt=" ")

plt.legend()

plt.show()

plt.stem(linspace, gaussian_variance_pdf, 'r', label='Variance', basefmt=" ")

plt.legend()

plt.show()

from IPython.display import display

probabilities_alpha = pd.DataFrame({

'event_alpha': linspace,

'prior_knowledge_alpha': alpha_pdf,

});

probabilities_beta = pd.DataFrame({

'event_beta': linspace,

'prior_knowledge_beta': beta_pdf,

});

probabilities_variance = pd.DataFrame({

'event_variance': linspace,

'prior_knowledge_variance': gaussian_variance_pdf,

});

display(probabilities_alpha.head())

display(probabilities_beta.head())

display(probabilities_variance.head())| event_alpha | prior_knowledge_alpha | |

|---|---|---|

| 0 | -3.000000 | 0.024695 |

| 1 | -2.769231 | 0.025362 |

| 2 | -2.538462 | 0.025991 |

| 3 | -2.307692 | 0.026579 |

| 4 | -2.076923 | 0.027122 |

| event_beta | prior_knowledge_beta | |

|---|---|---|

| 0 | -3.000000 | 0.017404 |

| 1 | -2.769231 | 0.018207 |

| 2 | -2.538462 | 0.019006 |

| 3 | -2.307692 | 0.019798 |

| 4 | -2.076923 | 0.020579 |

| event_variance | prior_knowledge_variance | |

|---|---|---|

| 0 | -3.000000 | 0.0 |

| 1 | -2.769231 | 0.0 |

| 2 | -2.538462 | 0.0 |

| 3 | -2.307692 | 0.0 |

| 4 | -2.076923 | 0.0 |

all_possible_events= pd.merge(

left=pd.merge(left=probabilities_alpha,

right=probabilities_beta,

how='cross'),

right=probabilities_variance,

how='cross')

all_possible_events = all_possible_events[all_possible_events.columns.sort_values()]

all_possible_events['prior'] = all_possible_events['prior_knowledge_alpha'] * all_possible_events['prior_knowledge_beta'] * all_possible_events['prior_knowledge_variance']

all_possible_events| event_alpha | event_beta | event_variance | prior_knowledge_alpha | prior_knowledge_beta | prior_knowledge_variance | prior | |

|---|---|---|---|---|---|---|---|

| 0 | -3.0 | -3.0 | -3.000000 | 0.024695 | 0.017404 | 0.000000e+00 | 0.000000e+00 |

| 1 | -3.0 | -3.0 | -2.769231 | 0.024695 | 0.017404 | 0.000000e+00 | 0.000000e+00 |

| 2 | -3.0 | -3.0 | -2.538462 | 0.024695 | 0.017404 | 0.000000e+00 | 0.000000e+00 |

| 3 | -3.0 | -3.0 | -2.307692 | 0.024695 | 0.017404 | 0.000000e+00 | 0.000000e+00 |

| 4 | -3.0 | -3.0 | -2.076923 | 0.024695 | 0.017404 | 0.000000e+00 | 0.000000e+00 |

| ... | ... | ... | ... | ... | ... | ... | ... |

| 63995 | 6.0 | 6.0 | 5.076923 | 0.014391 | 0.020837 | 6.471799e-12 | 1.940668e-15 |

| 63996 | 6.0 | 6.0 | 5.307692 | 0.014391 | 0.020837 | 2.041343e-12 | 6.121281e-16 |

| 63997 | 6.0 | 6.0 | 5.538462 | 0.014391 | 0.020837 | 6.438830e-13 | 1.930782e-16 |

| 63998 | 6.0 | 6.0 | 5.769231 | 0.014391 | 0.020837 | 2.030944e-13 | 6.090098e-17 |

| 63999 | 6.0 | 6.0 | 6.000000 | 0.014391 | 0.020837 | 6.406030e-14 | 1.920947e-17 |

64000 rows × 7 columns

# We image a system where each day, the chance of rain is represented by number_of_clients = alpha + beta * temperature

# We observed a day with 15 degrees, and 5 clients

# What is the likelihood of these observations at each possible "event" of alpha, beta and variance ?

all_possible_events_one_day = all_possible_events.copy()

likelihood = pd.DataFrame({

'likelihood': [

norm.pdf(x=5, # We observe 5 clients

loc=row['event_alpha'] + row['event_beta'] * 15, # We had 15 degrees, and every possible combinations of alpha, beta and variance

scale=row['event_variance'])

for _, row in all_possible_events_one_day.iterrows()],

}, index=all_possible_events_one_day.index);

all_possible_events_one_day['likelihood'] = likelihood

all_possible_events_one_day/usr/local/lib/python3.10/dist-packages/scipy/stats/_distn_infrastructure.py:1983: RuntimeWarning: divide by zero encountered in divide

x = np.asarray((x - loc)/scale, dtype=dtyp)| event_alpha | event_beta | event_variance | prior_knowledge_alpha | prior_knowledge_beta | prior_knowledge_variance | prior | likelihood | |

|---|---|---|---|---|---|---|---|---|

| 0 | -3.0 | -3.0 | -3.000000 | 0.024695 | 0.017404 | 0.000000e+00 | 0.000000e+00 | NaN |

| 1 | -3.0 | -3.0 | -2.769231 | 0.024695 | 0.017404 | 0.000000e+00 | 0.000000e+00 | NaN |

| 2 | -3.0 | -3.0 | -2.538462 | 0.024695 | 0.017404 | 0.000000e+00 | 0.000000e+00 | NaN |

| 3 | -3.0 | -3.0 | -2.307692 | 0.024695 | 0.017404 | 0.000000e+00 | 0.000000e+00 | NaN |

| 4 | -3.0 | -3.0 | -2.076923 | 0.024695 | 0.017404 | 0.000000e+00 | 0.000000e+00 | NaN |

| ... | ... | ... | ... | ... | ... | ... | ... | ... |

| 63995 | 6.0 | 6.0 | 5.076923 | 0.014391 | 0.020837 | 6.471799e-12 | 1.940668e-15 | 1.350756e-71 |

| 63996 | 6.0 | 6.0 | 5.307692 | 0.014391 | 0.020837 | 2.041343e-12 | 6.121281e-16 | 1.111450e-65 |

| 63997 | 6.0 | 6.0 | 5.538462 | 0.014391 | 0.020837 | 6.438830e-13 | 1.930782e-16 | 1.720943e-60 |

| 63998 | 6.0 | 6.0 | 5.769231 | 0.014391 | 0.020837 | 2.030944e-13 | 6.090098e-17 | 6.516033e-56 |

| 63999 | 6.0 | 6.0 | 6.000000 | 0.014391 | 0.020837 | 6.406030e-14 | 1.920947e-17 | 7.462108e-52 |

64000 rows × 8 columns

all_possible_events_one_day.sort_values('likelihood', ascending=False).head()| event_alpha | event_beta | event_variance | prior_knowledge_alpha | prior_knowledge_beta | prior_knowledge_variance | prior | likelihood | |

|---|---|---|---|---|---|---|---|---|

| 56534 | 5.076923 | 0.000000 | 0.230769 | 0.017657 | 0.026489 | 0.215931 | 0.000101 | 1.635327 |

| 32574 | 1.615385 | 0.230769 | 0.230769 | 0.028062 | 0.026953 | 0.215931 | 0.000163 | 1.635327 |

| 8614 | -1.846154 | 0.461538 | 0.230769 | 0.027618 | 0.027368 | 0.215931 | 0.000163 | 1.635327 |

| 7014 | -2.076923 | 0.461538 | 0.230769 | 0.027122 | 0.027368 | 0.215931 | 0.000160 | 1.384275 |

| 30974 | 1.384615 | 0.230769 | 0.230769 | 0.028454 | 0.026953 | 0.215931 | 0.000166 | 1.384275 |

all_possible_events_one_day['posterior_unnormalized'] = all_possible_events_one_day['prior'] * all_possible_events_one_day['likelihood']

all_possible_events_one_day['posterior'] = all_possible_events_one_day['posterior_unnormalized'] / all_possible_events_one_day['posterior_unnormalized'].sum()

all_possible_events_one_day| event_alpha | event_beta | event_variance | prior_knowledge_alpha | prior_knowledge_beta | prior_knowledge_variance | prior | likelihood | posterior_unnormalized | posterior | |

|---|---|---|---|---|---|---|---|---|---|---|

| 0 | -3.0 | -3.0 | -3.000000 | 0.024695 | 0.017404 | 0.000000e+00 | 0.000000e+00 | NaN | NaN | NaN |

| 1 | -3.0 | -3.0 | -2.769231 | 0.024695 | 0.017404 | 0.000000e+00 | 0.000000e+00 | NaN | NaN | NaN |

| 2 | -3.0 | -3.0 | -2.538462 | 0.024695 | 0.017404 | 0.000000e+00 | 0.000000e+00 | NaN | NaN | NaN |

| 3 | -3.0 | -3.0 | -2.307692 | 0.024695 | 0.017404 | 0.000000e+00 | 0.000000e+00 | NaN | NaN | NaN |

| 4 | -3.0 | -3.0 | -2.076923 | 0.024695 | 0.017404 | 0.000000e+00 | 0.000000e+00 | NaN | NaN | NaN |

| ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... |

| 63995 | 6.0 | 6.0 | 5.076923 | 0.014391 | 0.020837 | 6.471799e-12 | 1.940668e-15 | 1.350756e-71 | 2.621370e-86 | 9.709554e-84 |

| 63996 | 6.0 | 6.0 | 5.307692 | 0.014391 | 0.020837 | 2.041343e-12 | 6.121281e-16 | 1.111450e-65 | 6.803496e-81 | 2.520015e-78 |

| 63997 | 6.0 | 6.0 | 5.538462 | 0.014391 | 0.020837 | 6.438830e-13 | 1.930782e-16 | 1.720943e-60 | 3.322766e-76 | 1.230753e-73 |

| 63998 | 6.0 | 6.0 | 5.769231 | 0.014391 | 0.020837 | 2.030944e-13 | 6.090098e-17 | 6.516033e-56 | 3.968328e-72 | 1.469869e-69 |

| 63999 | 6.0 | 6.0 | 6.000000 | 0.014391 | 0.020837 | 6.406030e-14 | 1.920947e-17 | 7.462108e-52 | 1.433431e-68 | 5.309429e-66 |

64000 rows × 10 columns

The posterior is the 3 dimensional joint posterior probability for every sample (alpha, beta, variance).

We can also get the marginal posterior distributions for each dimension:

all_possible_events_one_day.groupby(['event_alpha'])['posterior'].sum()event_alpha

-3.000000 0.002512

-2.769231 0.005188

-2.538462 0.012265

-2.307692 0.040148

-2.076923 0.101899

-1.846154 0.119673

-1.615385 0.061342

-1.384615 0.019318

-1.153846 0.007543

-0.923077 0.003718

-0.692308 0.001918

-0.461538 0.001131

-0.230769 0.000881

0.000000 0.000998

0.230769 0.001564

0.461538 0.002950

0.692308 0.005899

0.923077 0.013508

1.153846 0.042828

1.384615 0.105282

1.615385 0.119758

1.846154 0.059455

2.076923 0.018135

2.307692 0.006858

2.538462 0.003274

2.769231 0.001636

3.000000 0.000934

3.230769 0.000705

3.461538 0.000772

3.692308 0.001172

3.923077 0.002140

4.153846 0.004145

4.384615 0.009193

4.615385 0.028230

4.846154 0.067214

5.076923 0.074052

5.307692 0.035608

5.538462 0.010519

5.769231 0.003853

6.000000 0.001781

Name: posterior, dtype: float64plt.title('marginal posterior alpha')

marginal_alpha = all_possible_events_one_day.groupby(['event_alpha'])['posterior'].sum()

plt.stem(marginal_alpha.index, marginal_alpha)

plt.show()

plt.title('marginal posterior beta')

marginal_beta = all_possible_events_one_day.groupby(['event_beta'])['posterior'].sum()

plt.stem(marginal_beta.index, marginal_beta)

plt.show()

plt.title('marginal posterior variance')

marginal_variance = all_possible_events_one_day.groupby(['event_variance'])['posterior'].sum()

plt.stem(marginal_variance.index, marginal_variance)

plt.show()

all_possible_events_one_day_ = all_possible_events_one_day.dropna()

sampled_parameters = choice(all_possible_events_one_day_.index, size=100, p=all_possible_events_one_day_['posterior'])

sampled_parameters = all_possible_events_one_day_.loc[sampled_parameters]

def mxline(intercept, slope, start=-2, end=7):

y1 = slope*start + intercept

y2 = slope*end + intercept

plt.plot([start, end], [y1, y2])

for _, row in sampled_parameters[['event_alpha', 'event_beta']].iterrows():

mxline(row['event_alpha'], slope=row['event_beta'])

plt.show()

The limitations of our approach

Here we start seeing artifacts of our method. The marginal posterior probability of Alpha is showing spikes. Those spikes don’t exist, they are the result of the fact that we only took a limited set of points (30 x 30 x 30) in a 3 dimensional space. We essentially have a very low resolution.

Increasing the resolution is not a scalable strategy, it quickly becomes impossible to compute.

We have to go back to the formulas and use those. But the problem there is that the denominator is a huge integral with multiple dimensions. The solution will be to avoid computing the denominator, and only use the top part of the formula and some estimation method like MCMC to approximate the posterior.

Doing it for an actual dataset, with multiple days

import seaborn as sns

# Let's cheat and see what the alpha and beta values could be

sns.regplot(x=[0, 1, 5, 7, 6, 2 ], y=[5, 6, 15, 17, 18, 12], truncate=False)

import math

temperatures = [0, 1, 5, 7, 6, 2 ] # x

n_clients = [5, 6, 15, 17, 18, 12] # y

# Finding the likelihood of observing those values at every possible alpha,beta,variance events

all_possible_events_mutliple_days = all_possible_events.copy()

for i, sample in enumerate(zip(temperatures, n_clients)):

likelihood = pd.Series([

norm.pdf(x=sample[1],

loc=row['event_alpha'] + row['event_beta'] * sample[0],

scale=row['event_variance'])

for _, row in all_possible_events_mutliple_days.iterrows()],

);

all_possible_events_mutliple_days[f'likelihood_{i}'] = likelihood

# The likelihood is the product of all the daily likelihoods !

all_possible_events_mutliple_days['likelihood'] = math.prod([all_possible_events_mutliple_days[f'likelihood_{i}'] for i in range(len(temperatures))])

all_possible_events_mutliple_days

all_possible_events_mutliple_days['posterior_unnormalized'] = all_possible_events_mutliple_days['prior'] * all_possible_events_mutliple_days['likelihood']

all_possible_events_mutliple_days['posterior'] = all_possible_events_mutliple_days['posterior_unnormalized'] / all_possible_events_mutliple_days['posterior_unnormalized'].sum()

all_possible_events_mutliple_days = all_possible_events_mutliple_days.dropna()

# Once we know the posterior of each 3-dimensional event, we can gather the marginal posterior distributions

plt.title('marginal posterior alpha')

marginal_alpha = all_possible_events_mutliple_days.groupby(['event_alpha'])['posterior'].sum()

plt.stem(marginal_alpha.index, marginal_alpha)

plt.show()

plt.title('marginal posterior beta')

marginal_beta = all_possible_events_mutliple_days.groupby(['event_beta'])['posterior'].sum()

plt.stem(marginal_beta.index, marginal_beta)

plt.show()

plt.title('marginal posterior variance')

marginal_variance = all_possible_events_mutliple_days.groupby(['event_variance'])['posterior'].sum()

plt.stem(marginal_variance.index, marginal_variance)

plt.show()/usr/local/lib/python3.10/dist-packages/scipy/stats/_distn_infrastructure.py:1983: RuntimeWarning: divide by zero encountered in divide

x = np.asarray((x - loc)/scale, dtype=dtyp)

/usr/local/lib/python3.10/dist-packages/scipy/stats/_distn_infrastructure.py:1983: RuntimeWarning: divide by zero encountered in divide

x = np.asarray((x - loc)/scale, dtype=dtyp)

/usr/local/lib/python3.10/dist-packages/scipy/stats/_distn_infrastructure.py:1983: RuntimeWarning: invalid value encountered in divide

x = np.asarray((x - loc)/scale, dtype=dtyp)

/usr/local/lib/python3.10/dist-packages/scipy/stats/_distn_infrastructure.py:1983: RuntimeWarning: divide by zero encountered in divide

x = np.asarray((x - loc)/scale, dtype=dtyp)

/usr/local/lib/python3.10/dist-packages/scipy/stats/_distn_infrastructure.py:1983: RuntimeWarning: invalid value encountered in divide

x = np.asarray((x - loc)/scale, dtype=dtyp)

/usr/local/lib/python3.10/dist-packages/scipy/stats/_distn_infrastructure.py:1983: RuntimeWarning: divide by zero encountered in divide

x = np.asarray((x - loc)/scale, dtype=dtyp)

/usr/local/lib/python3.10/dist-packages/scipy/stats/_distn_infrastructure.py:1983: RuntimeWarning: divide by zero encountered in divide

x = np.asarray((x - loc)/scale, dtype=dtyp)

/usr/local/lib/python3.10/dist-packages/scipy/stats/_distn_infrastructure.py:1983: RuntimeWarning: invalid value encountered in divide

x = np.asarray((x - loc)/scale, dtype=dtyp)

/usr/local/lib/python3.10/dist-packages/scipy/stats/_distn_infrastructure.py:1983: RuntimeWarning: divide by zero encountered in divide

x = np.asarray((x - loc)/scale, dtype=dtyp)

/usr/local/lib/python3.10/dist-packages/scipy/stats/_distn_infrastructure.py:1983: RuntimeWarning: invalid value encountered in divide

x = np.asarray((x - loc)/scale, dtype=dtyp)

all_possible_events_mutliple_daysWe can sample from the posterior and visualize what regression results this would give us

sampled_parameters = choice(all_possible_events_mutliple_days.index, size=100, p=all_possible_events_mutliple_days['posterior'])

sampled_parameters = all_possible_events_mutliple_days.loc[sampled_parameters]

def mxline(intercept, slope, start=-2, end=7):

y1 = slope*start + intercept

y2 = slope*end + intercept

plt.plot([start, end], [y1, y2])

for _, row in sampled_parameters[['event_alpha', 'event_beta']].iterrows():

mxline(row['event_alpha'], slope=row['event_beta'])

plt.show()

What we start to notice, is that this method is very expensive. We are only sampling 30*30*30 points.

The more dimensions, the harder it gets to have a good precision and actually find the most interesting points, which are likely to be those where the probabilities are high.

This is why many methods, quap, MCMC, etc. exist.

Doing the same with PyMC

With a single sample day

import pymc as pm

import arviz as az

basic_model = pm.Model()

# Reminder: This is how we did it manually

# linspace = np.linspace(0, 1, 30)

# alpha_pdf = beta.pdf(x=linspace, a=3, b=3)

# alpha_pdf = alpha_pdf / alpha_pdf.sum()

# beta_pdf = beta.pdf(x=linspace, a=3, b=1)

# beta_pdf = beta_pdf / beta_pdf.sum()

# gaussian_variance_pdf = expon.pdf(x=linspace, scale=0.2)

# gaussian_variance_pdf = gaussian_variance_pdf / gaussian_variance_pdf.sum()

Y = [5] # The number of clients

X1 = [15] # The temperature

with basic_model:

# Let's do the same with PyMC

alpha = pm.Normal("alpha", 0, 5)

beta = pm.Normal("beta", 2, 5)

sigma = pm.Exponential("sigma", scale=0.2)

# Expected value of outcome

mu = alpha + beta * X1

# Likelihood (sampling distribution) of observations

Y_obs = pm.Normal("Y_obs", mu=mu, sigma=sigma, observed=Y)

trace = pm.sample(5000)

az.plot_trace(trace, figsize=(10, 10));

With multiple days

basic_model = pm.Model()

temperatures = [0, 1, 5, 7, 6, 2 ] # x

n_clients = [5, 6, 15, 17, 18, 12] # y

with basic_model:

# Let's do the same with PyMC

alpha = pm.Normal("alpha", 0, 5)

beta = pm.Normal("beta", 2, 5)

sigma = pm.Exponential("sigma", scale=0.2)

# Expected value of outcome

mu = alpha + beta * temperatures

# Likelihood (sampling distribution) of observations

Y_obs = pm.Normal("Y_obs", mu=mu, sigma=sigma, observed=n_clients)

trace = pm.sample(5000)

az.plot_trace(trace, figsize=(10, 10));

Re-exploring the initial problem, but differently

In the first problem of this notebook, we analyzed the probability of 2 events, “Rain” and “NoRain” based on some additional observerd data.

Let’s reproduce our results with PyMC, where we model the initial events as “Rain” and “NoRain”

basic_model = pm.Model()

with basic_model:

p = pm.Bernoulli("Rain", 0.5) # A prio on the Rain event

p_yes = p * 2/3 + (1-p) * 1/3

Y_obs = pm.Bernoulli("Y_obs", p=p_yes, observed=1) # The chances of observing "Friend saying it rains"

trace = pm.sample(5000)

az.plot_trace(trace, figsize=(6,3));

Another way to tackle the same problem is to consider the events to be all possible values of “p”, the probability of a binomial distribution that would represent the chances of raining.

linspace = np.linspace(0, 1, 30)

pdf = uniform.pdf(x=linspace)

pdf = pdf/pdf.sum()

plt.title('prior')

plt.stem(linspace, pdf)

plt.show()

probabilities = pd.DataFrame({

'events': linspace,

'prior_knowledge': pdf,

});

probabilities

# We observed 1 friend saying "rain"

likelihood = pd.DataFrame({

'events': linspace,

'likelihood': [

bernoulli.pmf(k=1, p=1/3*(1-probability) + 2/3*probability) # Here the system is the link between rain and the friend lying about it

for probability in linspace],

});

likelihood

plt.title('likelihood')

plt.stem(likelihood['events'], likelihood['likelihood'])

plt.show()

bayes = pd.concat([probabilities, likelihood.drop(columns=['events'])], axis=1)

bayes

bayes['posterior_unnormalized'] = bayes['prior_knowledge'] * bayes['likelihood']

bayes

bayes['posterior'] = bayes['posterior_unnormalized'] / bayes['posterior_unnormalized'].sum()

bayes

plt.title('posterior')

plt.stem(linspace, bayes['posterior'])

plt.show()

Now the same but with PyMC

basic_model = pm.Model()

with basic_model:

p = pm.Uniform("p", 0, 1) # A flat prior between 0 and 1 for the probability of rain

rain = pm.Bernoulli("rain", p=p) # we plug it in here

p_yes = rain * 2/3 + (1-rain) * 1/3

Y_obs = pm.Bernoulli("Y_obs", p=p_yes, observed=1) # The chances of observing "Friend saying it rains"

trace = pm.sample(5000)

az.plot_trace(trace, figsize=(6,3));